Terms like ‘AI’ and ‘machine learning’ are slowly becoming a part of our everyday world. From research documents to your news feed, chances are you’ve seen these terms appearing more often in recent years.

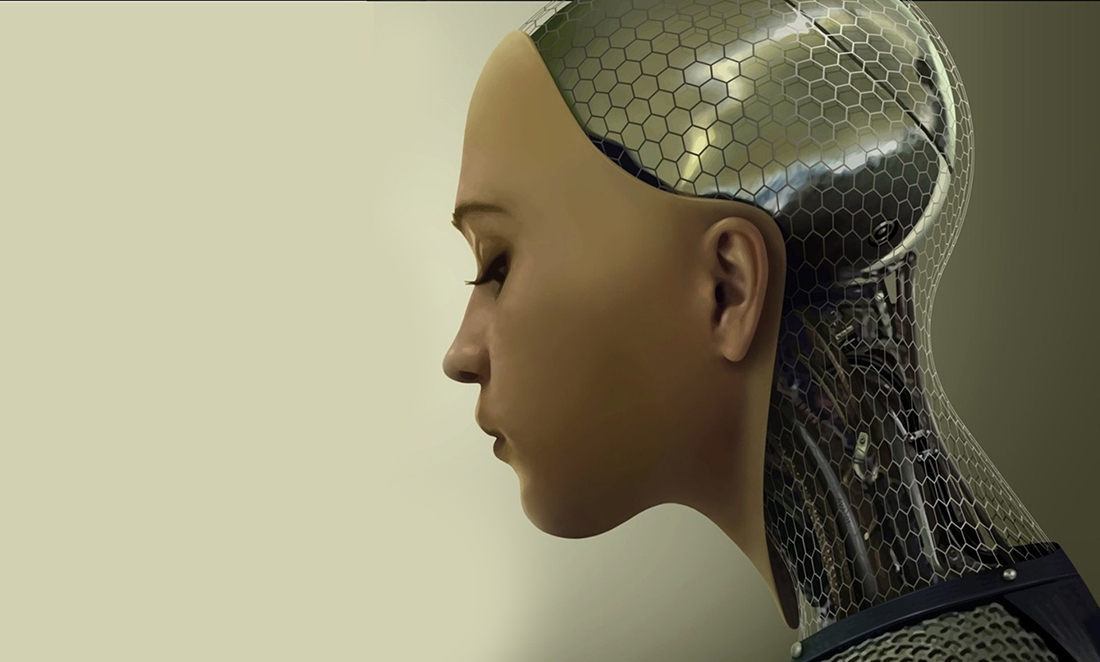

While these ideas are becoming more mainstream, they raise ancient philosophical questions that we have yet to answer. Questions like “What does it mean to be human?” or “How do we quantify harm?”

For some applications of AI, answering these age-old questions is critical. In cases where risks are involved, like driverless cars, having an answer can be a matter of life or death.

Robot ethics

These questions aren’t being ignored as the drive towards automation continues. Researchers around the world have been looking at how to answer them.

One such researcher is Anna Sawyer, who is writing her PhD on robot ethics at the University of Western Australia.

Anna says robot ethics is about how machines make decisions with serious outcomes and the frameworks we should have in place to guide those decisions.

Anna’s work has seen her advise the team behind I Am Mother, an Australian-made science fiction film exploring some of these issues.

Humans vs robots

A key part of robot ethics is the difference between human and robot decision making.

In the case of trying to avoid an accident, humans make reactive decisions with limited time and information. But robots can make calculated, proactive decisions, potentially with more information.

But here’s the catch – we have to decide how robots make those decisions. And that is forcing us to make some very uncomfortable choices in the process.

Like deciding whether a car carrying two kids and a parent should hit a school bus full of children or hit a wall to avoid the bus.

Or deciding to save a younger passenger at the expense of an older person who has less life to live.

It also brings about a discussion around reducing harm. When does reduction go too far? Or, to use an example Anna gives, would we want to prevent a stubbed toe at the cost of a priceless piece of art?

This dilemma is at the core of the discussion on robot ethics.

Big data to the rescue?

You might think the solution lies in more data, but it can complicate matters even further.

Think about the personal data Google or Apple might have about you from your phone and social media use. Now consider that they’re getting into the driverless car business.

Anna says this data has the potential to be used to make decisions in life or death situations such as a car crash.

Going back to the choice between sacrificing an older person or a younger one, what would happen if the older person had a Nobel Peace Prize? And the younger person had a violent criminal record?

“Does that change – and should that change – the way that the vehicle acts?” Anna asks. “If it does, how do we then program it?”

The future

We don’t have the answers to these questions yet, so how do we teach a robot to answer them?

Like many ethical questions, it comes down to an age-old saying.

“Just because we can do it doesn’t mean we should do it,” Anna says.

“But if we are going to do it, we should make [sure] as many people as possible are involved in that conversation.”

We often forget that the future, by definition, is always yet to be written. But if technology continues in this direction, we might not have long to work it out.