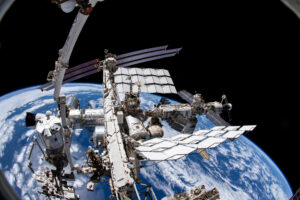

Every time you ask ChatGPT a question, a neural network running inside a massive data centre somewhere in the US starts computing probabilities.

Like other massive computer systems, such as those run by Google or Amazon, AI systems are supported by physical servers housed in data centres. These servers contain components that process and store data.

Central processing units (CPUs) are the brain behind most computers. Basically, they carry out instructions.

Most AI runs on other high-performance processors such as graphics processing units (GPUs), which were originally designed for gaming. These chips carry out many calculations at the same time, and many of them can be linked together to handle enormous amounts of computation.

Caption: AI processor POWER10

Credit: IBM

Strong vs weak AI

There are many types of AI, some of which have been around for decades.

AI models can be classified into three categories: weak, strong and super. Weak AI is the only type of AI that currently exists – everything else is purely theoretical.

Weak AI is trained to do a specific task, often better than humans can, but it can’t do anything outside of its training.

Strong AI would be able to apply its learning and skills to new contexts without additional human training, while super AI could think, learn, make judgements and have beyond-human capabilities.

It would have its own emotions, needs and beliefs.

Of course, this is all theoretical.

Is it that deep?

There are many different types in the class of weak AI. You’ve probably heard of machine learning, deep learning and large language models. But what are they?

Machine learning is an umbrella term for computation that learns patterns from data rather than following hand-written rules, loosely mimicking the way human brains learn.

Credit: Ying Tang/NurPhoto via Getty Images

Underneath this umbrella sit deep and non-deep learning. Non-deep learning uses structured data, so it needs humans to organise and label that data first.

Deep learning can interpret unstructured data, such as raw text or images, which reduces the need for human intervention.

Large language models

Generative AI tools such as ChatGPT are based on large language models (LLMs). LLMs are one branch of natural language processing (NLP). They are used to generate text, while many other NLP tools focus on analysing language.

LLMs create text by learning to predict the most probable next word in a sentence. This is similar to predictive text on your phone, except that an LLM is trained on billions of pages of text and is far more accurate.
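
To get a feel for next-word prediction, here is a minimal Python sketch. It is not how ChatGPT works under the hood: it swaps the neural network for a simple word-pair counter (sometimes called a bigram model), and the training sentence and function names are made up for illustration. The idea it shares with an LLM is the last step: turn what was seen in training into probabilities, then pick the most likely next word.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def next_word_probabilities(model, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # the word never appeared in training (or was never followed)
    return {candidate: count / total for candidate, count in counts.items()}


def predict_next_word(model, word):
    """Return the most probable next word, or None if the word is unknown."""
    probabilities = next_word_probabilities(model, word)
    if not probabilities:
        return None
    return max(probabilities, key=probabilities.get)


# Hypothetical toy training text; a real LLM is trained on billions of pages.
TRAINING_TEXT = "the cat sat on the mat the cat ate the fish"
model = train_bigram_model(TRAINING_TEXT)

print(next_word_probabilities(model, "the"))
print(predict_next_word(model, "the"))
```

In this toy text, "the" is followed by "cat" twice and by "mat" and "fish" once each, so the sketch gives "cat" a probability of 0.5 and predicts it. An LLM does something far richer, weighing the whole preceding passage rather than just one word, but the output is the same kind of thing: a probability for every possible next word.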

Whether you think AI will solve the world’s problems or create more, it’s certainly the new frontier.