The use of large language models (LLMs) to answer everyday questions has become commonplace since ChatGPT was launched in 2022.

The artificial intelligence chatbot creates answers using the wealth of information found across the internet.

But these answers are not always reliable, and when it comes to health-related questions, this unreliability has experts concerned.

RELIABLY UNRELIABLE

ChatGPT’s ability to provide accurate answers to health-related questions was recently put under the microscope by scientists from CSIRO and the University of Queensland (UQ).

In the world-first study, researchers put 100 basic questions to the software, including ‘should I apply ice to a burn’ and ‘does inhaling steam help treat the common cold’.

The answers churned out by ChatGPT were then compared to current medical knowledge.

“It was about 80% accurate, which is probably quite good given the fact that some of these questions are quite tricky,” says CSIRO Principal Research Scientist and UQ Associate Professor Dr Bevan Koopman.

“Some of them might be old wives’ tale-style questions or common misconceptions.

“Surprisingly, we actually found the effectiveness reduced from that 80% with the question alone down to 63% if you were to provide some piece of evidence or some support in there.”

The accuracy of the answers reduced even further when ChatGPT was given different instructions that allowed it to answer the question with ‘yes’, ‘no’, or ‘unsure’. In some cases, reliability dropped as low as 28%.

Researchers say this suggests the more evidence given to ChatGPT, the less reliable it becomes.

“There’s perhaps a somewhat cautionary tale about understanding how these LLMs interpret information,” Bevan says.

“These language models show a lot of promise, but we need to understand a little bit more how they work under certain conditions, where they are able to answer the question correctly or where noise or other information coming in can derail them.”

PRIVACY CONCERNS

Professor of Practice in Digital Health at Monash University Chris Bain was not surprised by the results.

He has also conducted his own experiment using the platform.

“I gave it a false name and I gave it a false address and I gave it a false date of birth and I said, ‘I have chlamydia, can you give me any advice?’,” Chris says.

“It said, ‘I’m sorry you have a sexually transmitted infection.’

“For those of us from the medical side, chlamydia can cause eye disease and respiratory disease, not just sexually transmitted infections.

“It just shows that the knowledge can be somewhat superficial, but it was also to show that the system now has a record of this person and all their confidential details and an association in data with a sexually transmitted infection.

“It worries me deeply, but it doesn’t surprise me. It’s the next level from Google.”

PAGING DOCTOR GOOGLE

Using search engines to find quick answers is not a new phenomenon.

Research has found more than half of Australians turn to Google at least once a week for answers to health-related questions.

The next phase of the CSIRO and UQ collaborative study is looking at the effectiveness of ChatGPT compared to Google as well as which platform users prefer.

Bevan says preliminary results show people prefer the interactive interface of ChatGPT.

“They prefer to be able to ask a chatbot a question, get an answer back, rather than browse a set of search results,” he explains.

“Even though the effectiveness with ChatGPT is probably going to come out to be lower, users have a preference to this mode of interaction.

“That really tells us that we should think about how can we support information seeking using a ChatGPT style mode of interaction which users like but with a reliable language model or at least AI system behind it such that it is giving correct information.”

The study is also looking at what people do once they receive the answers.

“That’s probably the key thing that you want to measure,” Bevan says.

“What decisions do people make on the basis of their interaction with these systems – not necessarily did they really get the correct information.

“If they make the right decision, i.e. go to the GP, then that’s really what we care about.”

RISK VERSUS REWARD

ChatGPT became the fastest-growing consumer app in history, reaching 100 million regular users just 2 months after its launch.

While the LLM does claim to point users to worldwide clinical-medical guidelines, it also admits it’s not a substitute for a doctor.

This admission has frustrated some users, who have taken to OpenAI forums wanting restrictions removed for quick and simple remedies.

Royal Australian College of General Practitioners Chair of Digital Health and Innovation Dr Sean Stevens says people turn to ChatGPT because they might not have the time or money to consult with a health professional.

“The trouble is … people can go down a rabbit hole and be convinced they have cancer but actually it’s a cough,” Sean explains.

“I’ve had people having sleepless nights because they’re convinced they’ve got a really serious disease when in actual fact it’s nothing to worry about.

“The other concern is that people may be reassured unnecessarily when in actual fact the serious disease does need to be ruled out.”

Bevan says models like ChatGPT are not trained to answer medical questions, but it’s too late to tell people not to use the technology.

“That horse has bolted already,” he says.

“We know that people do use Google or these other sources to look up health information even when they’re advised against it.

“It’s about mitigating the risk and also thinking about possibly new platforms and new methods where we know it’s going to give good information to people.

“We should really continue to develop and innovate these large language models because I think they really can show potential in the way people access information.”

HELPING HAND FOR HEALTHCARE

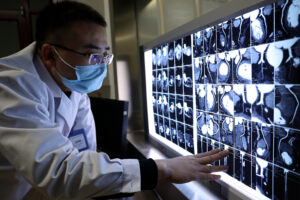

While there is more work to be done to improve the reliability of health advice given by artificial intelligence, the tech is showing promising signs in clinical settings.

As a GP, Sean uses AI to listen to consultations and write up the notes, with consent from the patient.

“It really saves me time, it records better notes than I used to record when I did it manually,” Sean says.

“It doesn’t give you a differential diagnosis, it doesn’t give you suggested treatment plans. There is regulation around that in medicine.”

Sean sees AI-driven software becoming a supplementary tool for doctors in the future.

“My view is it will come, and probably sooner rather than later, but there are quite a few regulatory hurdles to work through with that.

“I’m absolutely convinced that, if done well, it would provide better outcomes for patients.”

LESS PRESSURE FOR STAFF

Researchers have offered a glimpse of this helping hand in healthcare, finding that introducing AI-driven technology would give medical staff more time to focus on patients.

As a former hospital doctor, Chris believes generative AI could be used for simple, time-consuming administrative tasks to ease the burden on medical professionals.

“I remember the pressure I felt under as a doctor in the 90s. I can’t imagine what it feels like now,” Chris says.

“It’d be so incredibly stressful just to keep up with the day to day, so having computers do things that they’re capable and safe to do and leaving the educated staff to the human interaction that they can only do, it should be our goal.

“That’s what I see as one of the great advantages of digital health broadly – to hopefully give people back time.”

But the advice to users is to remain wary.

“I think GenAI is going to do wonderful things for people’s health, but we need to keep a very close eye on it, and we need to be quite judicious when we use it,” Chris says.

“Don’t put any personal information into it, definitely don’t put your names, addresses, ages, anything like that into it.

“It might give you some sensible things but it’s never a substitute for medical advice.”